Technology Partner

Data Quality for Databricks

Proactively monitor the health of Databricks Lakehouse data with Lightup Data Quality, enabling data teams to quickly identify data issues and remediate incidents before downstream data processing and analytics services are rendered unusable.

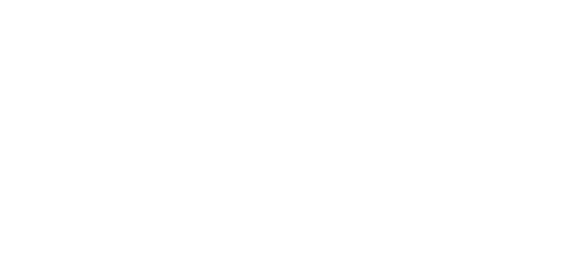

Data Quality Monitoring for Databricks

Lightup connects directly to Databricks, with full support for Unity Catalog, and provides no-code, low-code, and custom SQL Data Quality Checks that ensure data processed and analyzed in Databricks is correct, complete, and consistent, all without moving or copying data out of Databricks.

The accuracy, precision, and reliability of AI/ML applications, analytics, and services built on Databricks depend on the quality of the data they process. Maintaining high-quality data is critical for running Databricks workloads that produce accurate output and trustworthy insights.

Ensure Trusted Data in Databricks

To help drive high confidence and trust in Databricks data, enterprises need modern Data Quality Monitoring tools that are powerful, easy-to-use, extensible, and deeply integrated with Databricks.

Enterprises turn to Lightup Data Quality for Databricks when they have complex data quality requirements that legacy solutions cannot meet, so they can:

- Accelerate time-to-market, deploying no-code and low-code assisted-SQL Data Quality Checks in minutes, not months.

- Identify data quality issues in real time, find their root causes, and remediate problems before they cause data outages.

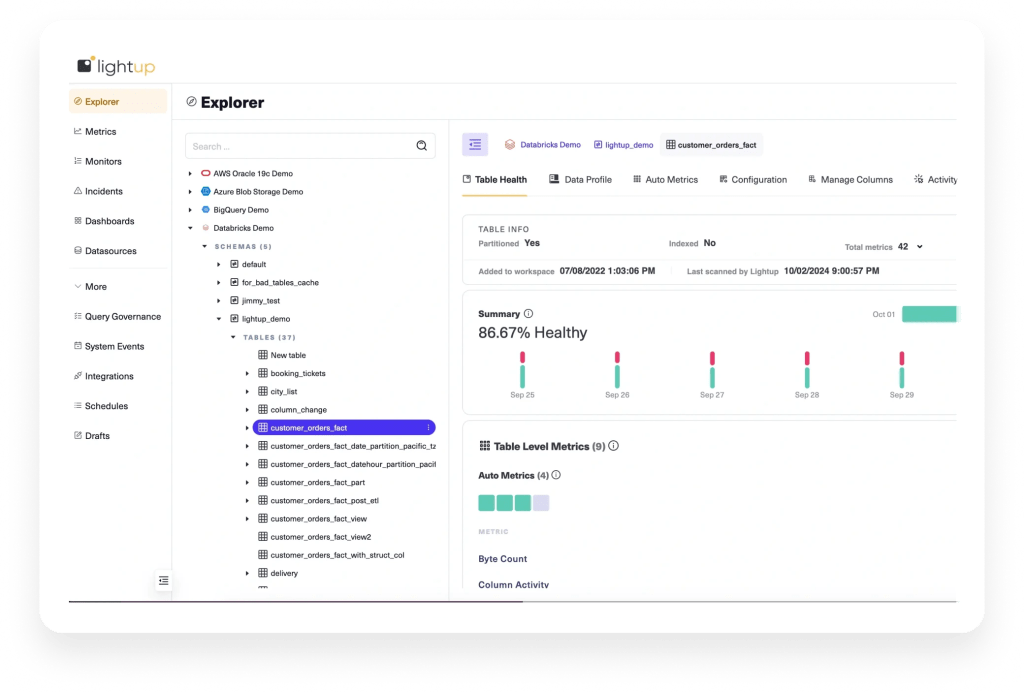

Pushdown Checks, Without Data Movement

Lightup deploys scalable Data Quality Checks 10x faster than legacy tools, optimized by:

- Aggregate queries with in-place processing at the data source, without moving or copying data.

- Time-bound pushdown queries, only using delta or incremental time-ranged data.

- Partition-aware queries, only scanning the specific partitions where relevant data resides.

Lightup doesn’t scan more data than it has to, keeping every query efficient and scalable without choking system performance, even on massive data volumes.
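To make the three optimizations concrete, here is a minimal sketch of what a time-bound, partition-aware aggregate query could look like. It is not Lightup's implementation; the helper `build_pushdown_query`, the table `sales.orders`, and the columns `event_ts`, `event_date`, and `customer_id` are hypothetical, and it assumes the table is partitioned by a date column derived from the event timestamp. The warehouse computes the aggregates in place and returns a single summary row, so no raw data leaves Databricks.

```python
import re
from datetime import datetime, timedelta

# Simple allow-list for table/column names, e.g. "sales.orders" or "event_ts".
_IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*(\.[A-Za-z_][A-Za-z0-9_]*)*")


def build_pushdown_query(table, ts_col, partition_col, column, start, end):
    """Return an aggregate SQL query scoped to the time window [start, end).

    Hypothetical helper for illustration only; not part of any Lightup API.
    Assumes `partition_col` holds dates (yyyy-MM-dd) derived from `ts_col`.
    """
    for name in (table, ts_col, partition_col, column):
        if not _IDENTIFIER.fullmatch(name):
            raise ValueError(f"unsafe identifier: {name!r}")

    fmt = "%Y-%m-%d %H:%M:%S"
    # Partition-aware: only the date partitions overlapping the window are scanned.
    first_part, last_part = start.date().isoformat(), end.date().isoformat()
    # Aggregate pushdown: the warehouse returns one summary row, not raw data.
    return (
        "SELECT COUNT(*) AS row_count, "
        f"SUM(CASE WHEN {column} IS NULL THEN 1 ELSE 0 END) AS null_count\n"
        f"FROM {table}\n"
        f"WHERE {partition_col} BETWEEN '{first_part}' AND '{last_part}'\n"
        # Time-bound: only the incremental slice since the previous check.
        f"  AND {ts_col} >= TIMESTAMP '{start.strftime(fmt)}'\n"
        f"  AND {ts_col} < TIMESTAMP '{end.strftime(fmt)}'"
    )


# Example: check the last hour of data, a window that crosses midnight.
end = datetime(2024, 5, 2, 0, 30)
start = end - timedelta(hours=1)
print(build_pushdown_query("sales.orders", "event_ts", "event_date", "customer_id", start, end))
```

Because the window crosses midnight, the partition filter covers both `2024-05-01` and `2024-05-02`, while the timestamp filter trims the scan to exactly one hour of data.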

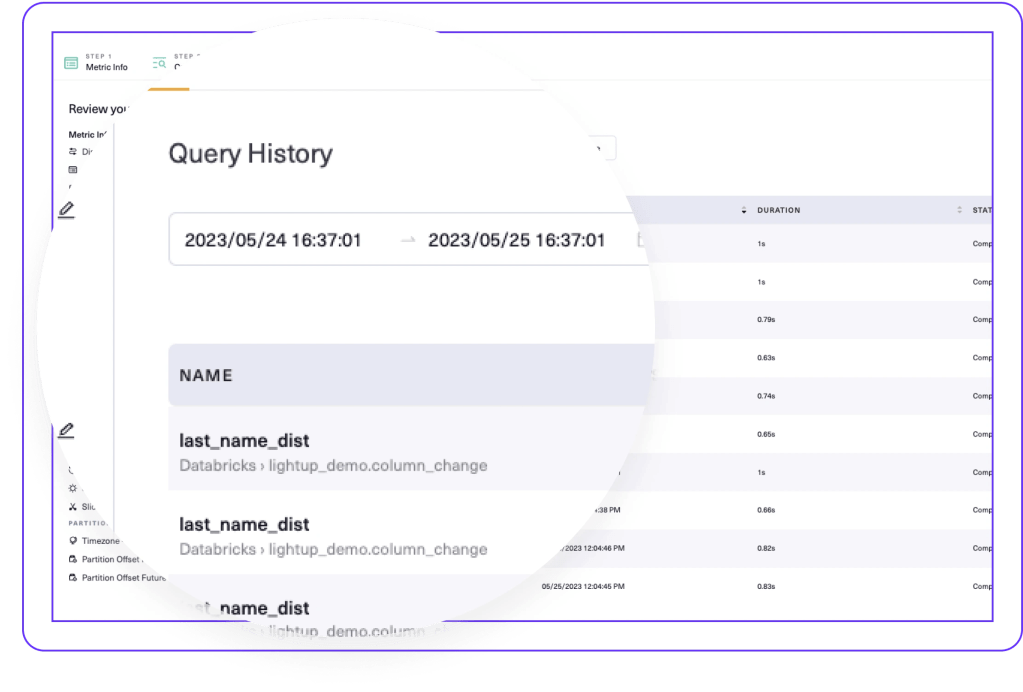

Query Governance

With Lightup, get full control over query governance, ensuring queries don’t scan too much data or run too long.

Configure Lightup to colocate its queries with Databricks resources while those resources are already running, so checks run at optimal times, such as after jobs finish.

- Get comprehensive query history

- Schedule when queries run

- Bound query runtime

- Set cost budgets

Key Benefits

Reliable Data and Insights

Increase confidence in Databricks data, making it the go-to trusted source for all data projects and increasing the reliability of analytics and insights for data-driven decision-making.

Fast Ramp-up, Fast ROI

Deploy Data Quality Checks in under 20 minutes instead of months, enabling data teams to reach Data Quality coverage goals 10x faster than with legacy data quality tools, without spending developer cycles.

Easy Custom Queries

Create custom Data Quality queries with “SQL fragments” by adding business logic to system-generated assisted SQL checks, without learning a proprietary rule engine.

Lightup Data Quality Design Patterns for Databricks

Scheduled Checks

When a pipeline transforms data in stages, such as moving data from one Delta table to another, run Data Quality Checks on a schedule at different stages of the pipeline.

Trigger Mode

When orchestrating ETL and declaring pipeline definitions, include closed-loop response actions, such as quarantining bad data or breaking the pipeline on data quality failures, before processing data further.
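A closed-loop response of this kind can be sketched as a gate between pipeline stages. The sketch below is hypothetical and is not a Lightup or Databricks API: `gate_batch`, `DataQualityError`, the `amount_is_valid` rule, and the 1% default failure limit are all illustrative assumptions. Rows that fail the rule are quarantined, and if too many fail, the gate raises an exception that breaks the pipeline before bad data moves further downstream.

```python
class DataQualityError(Exception):
    """Raised to break the pipeline when a batch fails its data quality check."""


def gate_batch(rows, is_valid, max_failure_rate=0.01):
    """Split rows into (passed, quarantined) and break the pipeline if too many fail.

    Hypothetical sketch of a closed-loop response; not a Lightup or Databricks API.
    """
    passed, quarantined = [], []
    for row in rows:
        # Rows failing the rule are set aside (quarantined) instead of flowing downstream.
        (passed if is_valid(row) else quarantined).append(row)
    failure_rate = len(quarantined) / len(rows) if rows else 0.0
    if failure_rate > max_failure_rate:
        # Circuit breaker: stop before bad data reaches later pipeline stages.
        raise DataQualityError(
            f"{failure_rate:.1%} of rows failed (limit {max_failure_rate:.1%})"
        )
    return passed, quarantined


def amount_is_valid(row):
    """Example rule: amount must be present and non-negative."""
    return row.get("amount") is not None and row["amount"] >= 0


orders = [
    {"id": 1, "amount": 10.0},
    {"id": 2, "amount": None},
    {"id": 3, "amount": 5.5},
    {"id": 4, "amount": 7.25},
]
passed, quarantined = gate_batch(orders, amount_is_valid, max_failure_rate=0.3)
print(len(passed), len(quarantined))  # prints "3 1"
```

With the default 1% limit, the same batch (25% failures) would raise `DataQualityError` instead, which is the "break the pipeline" behavior; the looser 30% limit lets it through while still quarantining the bad row.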

Delta Live Tables

When processing high-volume streaming data with low latency, deploy a Structured Streaming job in the Databricks cluster that continuously calculates data quality indicators (DQIs) as new data arrives, with sub-second data processing and analysis.
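Continuously calculating a DQI means updating it incrementally per micro-batch rather than rescanning all data. In Databricks, logic like this would typically run inside a Structured Streaming `foreachBatch` callback, which hands each micro-batch to a function. The sketch below is plain Python over lists of dicts so it runs anywhere; the `RunningNullRate` class and the `user_id` column are hypothetical, and null rate is just one example of a DQI.

```python
from dataclasses import dataclass


@dataclass
class RunningNullRate:
    """Hypothetical incremental DQI: row count and null rate of one column.

    Each micro-batch updates running totals, so the metric stays current
    without rescanning data that was already processed.
    """
    column: str
    rows_seen: int = 0
    nulls_seen: int = 0

    def update(self, batch):
        """Fold one micro-batch (a list of dicts) into the totals; return its null rate."""
        batch_nulls = sum(1 for row in batch if row.get(self.column) is None)
        self.rows_seen += len(batch)
        self.nulls_seen += batch_nulls
        return batch_nulls / len(batch) if batch else 0.0

    @property
    def null_rate(self):
        """Cumulative null rate across every batch seen so far."""
        return self.nulls_seen / self.rows_seen if self.rows_seen else 0.0


dqi = RunningNullRate("user_id")
print(dqi.update([{"user_id": 1}, {"user_id": None}]))  # prints 0.5
print(dqi.update([{"user_id": 3}, {"user_id": 4}]))  # prints 0.0
print(dqi.rows_seen, dqi.null_rate)  # prints 4 0.25
```

Returning the per-batch value alongside the cumulative one lets an alert react to a sudden spike in a single batch even when the long-run rate still looks healthy.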

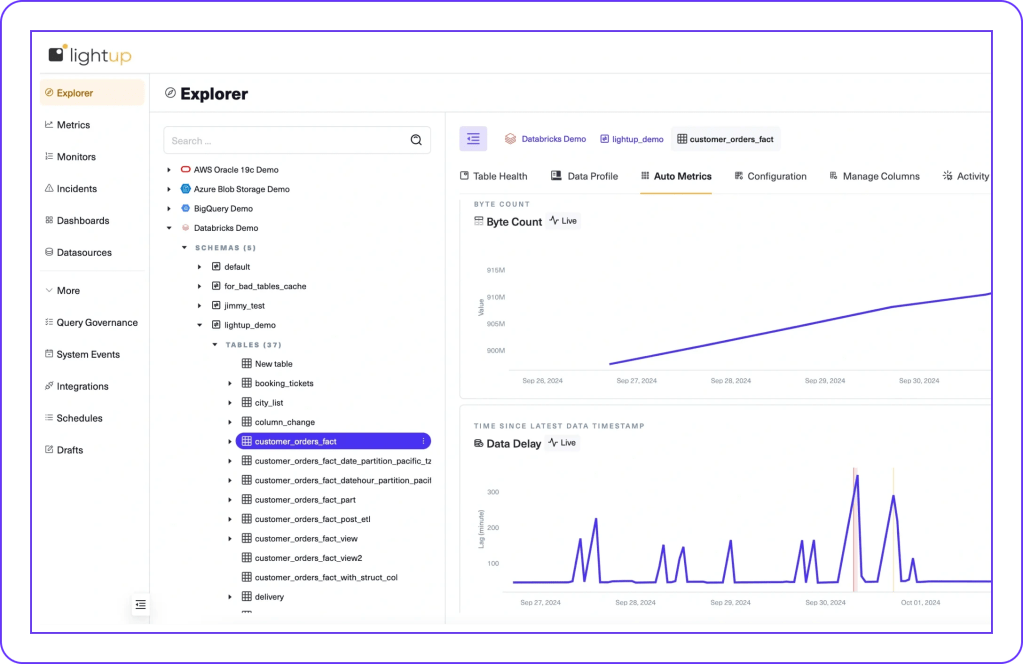

Better Together

Lightup and Databricks Integration

Simply put, Lightup Data Quality makes Databricks even better.

But don’t take our word for it.

To see how Lightup and Databricks work together, request a free trial or demo today.