Today’s enterprise organizations rely heavily on data to make informed decisions, drive innovation, and gain a competitive edge. However, data is only as valuable as its quality. Poor-quality data can lead to inaccurate insights, misguided decisions, and wasted resources.

Given the pivotal role of Data Quality within organizations, there’s growing demand for comprehensive Data Quality measures that start early and extend throughout the data life cycle.

Faced with enormous data volumes, leading enterprises are moving away from centralizing Data Quality within the IT organization. Instead, they’re decentralizing it: democratizing access to Data Quality tools and inviting business analysts, leadership teams, data consumers, and data domain experts to participate in the Data Quality life cycle.

What Does Democratizing Data Quality Mean?

To understand why enterprise organizations want to democratize Data Quality, we need to understand what democratizing Data Quality actually means.

Democratizing Data Quality means decentralizing Data Quality tasks that have traditionally been handled by enterprise IT departments or data engineers, such as writing Data Quality Checks, developing rule definitions, deciding which checks need monitors, setting up incident alerts, and performing root cause analysis to prevent future issues.

Why does that matter? In many large enterprise organizations, there is a knowledge and skills gap between two groups: the non-technical business stakeholders and data consumers who use the data and understand its context, and the technical teams who manage and deploy Data Quality queries and monitors for data pipelines.

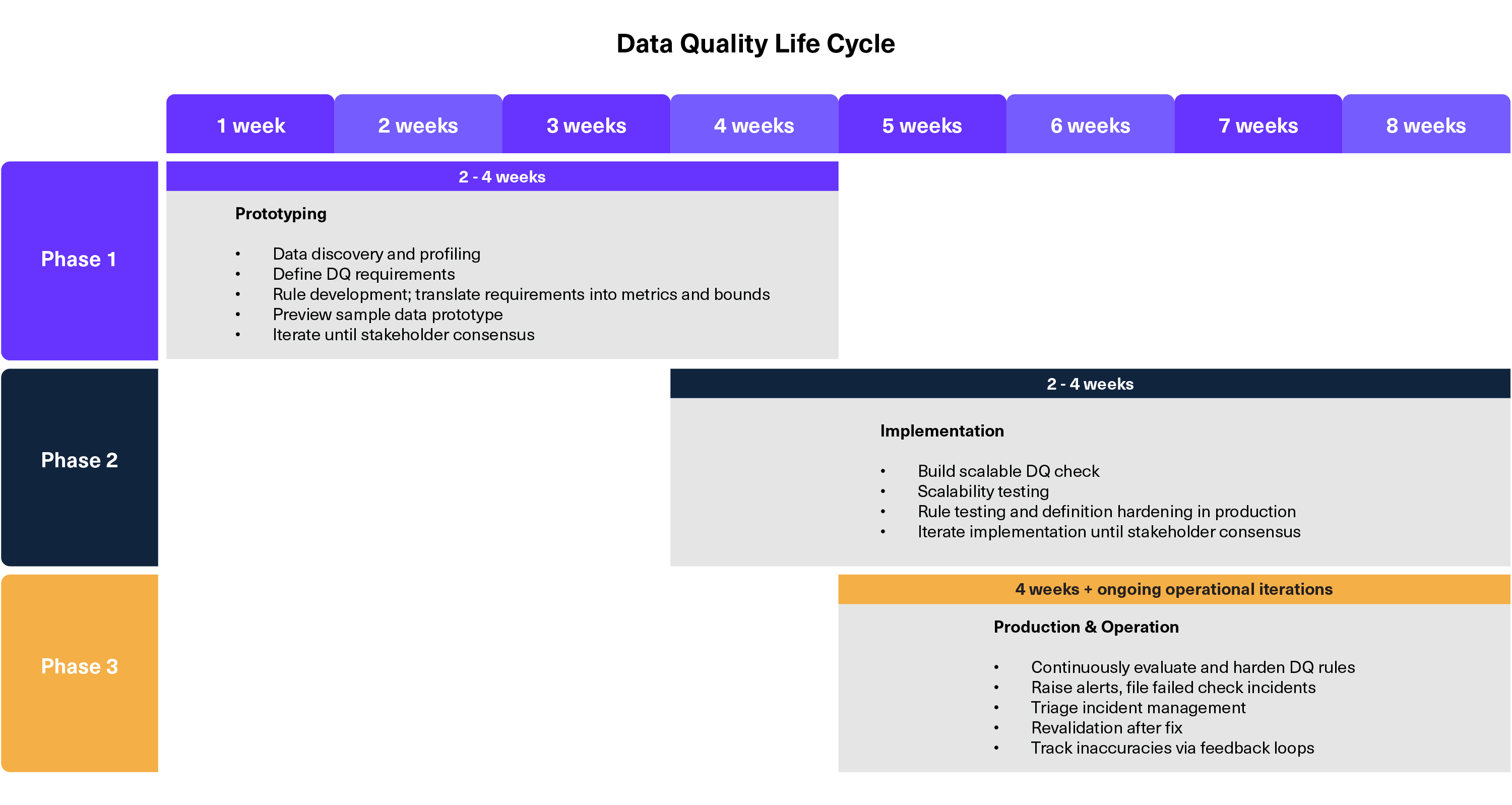

A quick look at the phases of a typical Data Quality life cycle (below) shows how long it actually takes to deploy Data Quality Checks at enterprise scale.

The first phase focuses on prototyping Data Quality Checks, which requires data discovery, profiling, and information gathering from relevant stakeholders and data domain experts. This phase can take two to four weeks as business requirements are translated into Data Quality rules and metrics.

In the second phase, Data Quality Checks are implemented. Data engineers build, test, and scale Data Quality Checks until the desired results are achieved. This can take another two to four weeks, depending on the complexity and accuracy of the rule definitions.

In the third phase, Data Quality Checks are put into production and operationalized. This is an ongoing feedback process that typically lasts four weeks or more: rules are continuously hardened, failed checks are tracked, alerts are set up, incidents are triaged and remediated, and checks are revalidated as needed. Taken together, the three phases add up to at least eight weeks from first prototype to operational checks.

All these phases require collaboration across departments, among data consumers, data domain experts, data engineers, and key stakeholders. It’s a collective effort that depends on a shared understanding of the data and the business use case to develop the right metrics and rules to monitor.

Who “Owns” Data Quality Within Enterprise Organizations?

Who’s responsible for ensuring Data Quality? It’s a long-standing question that still lacks a solid consensus.

Democratizing Data Quality means numerous teams and domain experts must collaborate, including:

- Data Engineers

Data engineers are typically responsible for designing and implementing data pipelines that extract, transform, and load (ETL) data from various sources into a central data repository.

They must ensure that their pipelines transform the data according to the standards and rules defined by data and business analysts, and verify that no transformation compromises data integrity.
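To make that integrity verification concrete, here is a minimal Python sketch that compares a transformation's input with its output. It assumes rows are plain dictionaries and that each record carries a unique business key; the `order_id` field and the `check_transformation_integrity` function are hypothetical, not part of any particular tool.

```python
from collections import Counter

def check_transformation_integrity(source_rows, target_rows, key):
    """Compare a pipeline step's input and output and return a list of issues found."""
    issues = []
    # A 1:1 transformation should neither drop nor add rows.
    if len(source_rows) != len(target_rows):
        issues.append(f"row count changed: {len(source_rows)} -> {len(target_rows)}")
    source_keys = {row[key] for row in source_rows}
    target_counts = Counter(row[key] for row in target_rows)
    # Every business key that went in should come out.
    missing = source_keys - set(target_counts)
    if missing:
        issues.append(f"keys dropped: {sorted(missing)}")
    # A join that fans out rows shows up as duplicate keys.
    duplicates = sorted(k for k, n in target_counts.items() if n > 1)
    if duplicates:
        issues.append(f"duplicate keys: {duplicates}")
    return issues

source = [
    {"order_id": 1, "amount": 20.0},
    {"order_id": 2, "amount": 35.5},
    {"order_id": 3, "amount": 12.0},
]
# Simulated bad output: order 3 was dropped and order 2 was duplicated by a join.
target = [
    {"order_id": 1, "amount_usd": 20.0},
    {"order_id": 2, "amount_usd": 35.5},
    {"order_id": 2, "amount_usd": 35.5},
]
print(check_transformation_integrity(source, target, "order_id"))
# prints ['keys dropped: [3]', 'duplicate keys: [2]']
```

The row counts match in this example; only the key-level comparison reveals the dropped and duplicated orders, which is why a row count on its own is a weak integrity check.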

- Data Analysts

While data engineers are primarily responsible for the technical aspects of Data Quality Management, data analysts focus on utilizing and analyzing data to derive meaningful insights.

They are frequently involved in cleansing data and may decide, for example, how to handle missing values, how to standardize formats, or how to manage outliers.
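Cleansing decisions like these are easier to review when they are written down explicitly. The sketch below assumes one common pair of choices, median imputation for missing values and the 1.5 × IQR rule for flagging outliers; both choices and the `clean_amounts` helper are illustrative assumptions, not a standard every team should adopt.

```python
import statistics

def clean_amounts(values, iqr_multiplier=1.5):
    """Impute missing values with the median and flag outliers with the IQR rule."""
    present = [v for v in values if v is not None]
    # Decision 1: replace each missing value (None) with the median of the observed values.
    median = statistics.median(present)
    imputed = [median if v is None else v for v in values]
    # Decision 2: flag, rather than delete, values far outside the interquartile range.
    q1, _, q3 = statistics.quantiles(present, n=4)
    iqr = q3 - q1
    low, high = q1 - iqr_multiplier * iqr, q3 + iqr_multiplier * iqr
    outliers = [v for v in imputed if v < low or v > high]
    return imputed, outliers

raw = [10.0, 12.0, None, 11.0, 13.0, 950.0, 12.5, 11.5, 10.5]
imputed, outliers = clean_amounts(raw)
print(imputed)
print(outliers)  # prints [950.0]
```

Flagging outliers instead of silently deleting them leaves the final call to someone with domain context: a value of 950 might be a typo or a legitimate bulk order.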

- Business Analysts

The business analyst plays a crucial role at the intersection of business processes and data. Ensuring Data Quality is an integral part of their responsibilities, because it directly affects the accuracy and reliability of the insights used for decision-making.

Business analysts work closely with stakeholders to gather requirements for data-driven projects. They identify the critical data elements and define Data Quality requirements based on business needs. Collaborating with data engineers and data analysts, business analysts define Data Quality standards and metrics, establishing rules for data accuracy, completeness, consistency, and timeliness.
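Rules for these four dimensions can be captured declaratively, so analysts define them and engineers run them. This sketch assumes a hypothetical order record with the fields shown and a fixed reference time; the thresholds (a 100,000 cap on order totals, a 24-hour freshness window) are placeholders a business analyst would replace with real requirements.

```python
from datetime import date, datetime, timedelta, timezone

# Fixed reference time so the timeliness rule is deterministic in this example.
NOW = datetime(2024, 1, 15, 12, 0, tzinfo=timezone.utc)

# Hypothetical rules: each maps a Data Quality dimension to a per-record predicate.
RULES = {
    # Completeness: required fields are present and non-empty.
    "completeness": lambda r: all(r.get(f) not in (None, "") for f in ("customer_id", "email", "country")),
    # Accuracy: the order total falls within a plausible business range.
    "accuracy": lambda r: r.get("order_total") is not None and 0 <= r["order_total"] <= 100_000,
    # Consistency: an order cannot ship before it was placed.
    "consistency": lambda r: r["ship_date"] is None or r["ship_date"] >= r["order_date"],
    # Timeliness: the record was loaded within the last 24 hours.
    "timeliness": lambda r: NOW - r["loaded_at"] <= timedelta(hours=24),
}

def evaluate(records, rules=RULES):
    """Return the fraction of records that pass each rule."""
    return {name: sum(1 for r in records if check(r)) / len(records) for name, check in rules.items()}

records = [
    {"customer_id": "C1", "email": "a@example.com", "country": "US", "order_total": 120.0,
     "order_date": date(2024, 1, 10), "ship_date": date(2024, 1, 12), "loaded_at": NOW - timedelta(hours=2)},
    # Missing email and a negative total: fails completeness and accuracy.
    {"customer_id": "C2", "email": "", "country": "DE", "order_total": -5.0,
     "order_date": date(2024, 1, 11), "ship_date": None, "loaded_at": NOW - timedelta(hours=3)},
    # Shipped before it was ordered: fails consistency.
    {"customer_id": "C3", "email": "c@example.com", "country": "FR", "order_total": 80.0,
     "order_date": date(2024, 1, 12), "ship_date": date(2024, 1, 9), "loaded_at": NOW - timedelta(hours=5)},
]

print(evaluate(records))
```

Keeping each rule as a small named predicate makes it easy for non-engineers to read, and easy to report pass rates per dimension.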

- Data Stewards

Data stewards are responsible for overseeing and managing the quality, integrity, and usage of data within an organization. Their role involves defining and enforcing data governance policies, ensuring compliance with data standards, and collaborating with various stakeholders to improve Data Quality.

As custodians of data, data stewards strive to maintain its accuracy, consistency, and reliability throughout its life cycle. They often bridge the gap between technical data management and business requirements, ensuring that data aligns with organizational goals and regulatory requirements.

- Executives

Executive leadership teams play a crucial role in shaping the strategy, culture, and priorities related to Data Quality. They establish why Data Quality matters to achieving organizational goals.

Since executives rely on data insights for strategic decision-making, they have a vested interest in the accuracy and reliability of data. They often monitor key performance indicators related to Data Quality by reviewing dashboards or reports summarizing the status of their organization’s Data Quality.
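Behind such a dashboard is usually a simple roll-up of individual check outcomes. As an illustration, assuming each check run is recorded with a dataset name and a pass/fail flag (a hypothetical format, not any specific product's schema), per-dataset and overall pass rates could be computed like this:

```python
from collections import defaultdict

def summarize(check_results):
    """Roll individual check outcomes up into per-dataset and overall pass rates (%)."""
    totals = defaultdict(lambda: [0, 0])  # dataset -> [checks passed, checks run]
    for result in check_results:
        counts = totals[result["dataset"]]
        counts[0] += int(result["passed"])
        counts[1] += 1
    per_dataset = {ds: round(100 * passed / run, 1) for ds, (passed, run) in sorted(totals.items())}
    overall = round(100 * sum(passed for passed, _ in totals.values()) / len(check_results), 1)
    return per_dataset, overall

# Hypothetical results from one day of scheduled checks.
results = [
    {"dataset": "orders", "check": "order_id_unique", "passed": True},
    {"dataset": "orders", "check": "amount_non_negative", "passed": True},
    {"dataset": "orders", "check": "loaded_within_24h", "passed": False},
    {"dataset": "orders", "check": "currency_valid", "passed": True},
    {"dataset": "customers", "check": "email_present", "passed": True},
    {"dataset": "customers", "check": "country_valid", "passed": True},
]
per_dataset, overall = summarize(results)
print(per_dataset, overall)  # prints {'customers': 100.0, 'orders': 75.0} 83.3
```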

How Do Enterprise Organizations Benefit from Democratizing Data Quality?

By allowing broader access to Data Quality processes and distributing the workload across departments, enterprise organizations can improve decision-making, collaboration, and efficiency.

Here are five key benefits of democratizing Data Quality.

1. Informed Decision-Making

Democratizing Data Quality ensures that accurate and reliable information is available to decision-makers. When employees across different departments have access to high-quality data, they can make informed decisions based on a shared understanding of the business landscape.

This promotes agility and responsiveness, enabling organizations to adapt quickly to changing market conditions.

2. Easier Collaboration

When individuals from various departments can trust and share the same data, they are more likely to collaborate effectively. Democratizing Data Quality promotes a culture of openness, where insights are shared freely.

This leads to a more cohesive and united approach to achieving organizational goals.

3. Empowered Data Citizens

Democratizing Data Quality empowers individuals beyond the data science and analytics teams. Citizen data scientists, employees with domain expertise but not necessarily formal training in data science, can leverage high-quality data to derive meaningful insights.

This broader access to data enables employees to contribute to data-driven initiatives, fostering a more inclusive and innovation-driven environment.

4. Proactive, Not Reactive, Data Quality

Poor Data Quality often wastes resources, as organizations invest time and money in correcting errors and cleaning up messy datasets. Democratizing Data Quality helps prevent these issues at the source by involving a broader range of stakeholders in Data Quality processes.

This, in turn, leads to more efficient resource allocation, as problems can be identified and addressed proactively rather than reactively.

5. Building Data Trust

Trust is a fundamental element in any data-driven organization. By democratizing Data Quality, organizations can build trust in their data assets. When everyone in the organization has access to reliable and accurate data, there is a shared confidence in the insights derived from that data.

This trust forms the foundation for making informed decisions and driving innovation with confidence.

How Can Different Departments Work Together to Ensure Data Quality?

Data Quality initiatives involve multiple teams and require coordinated effort from both technical and non-technical participants.

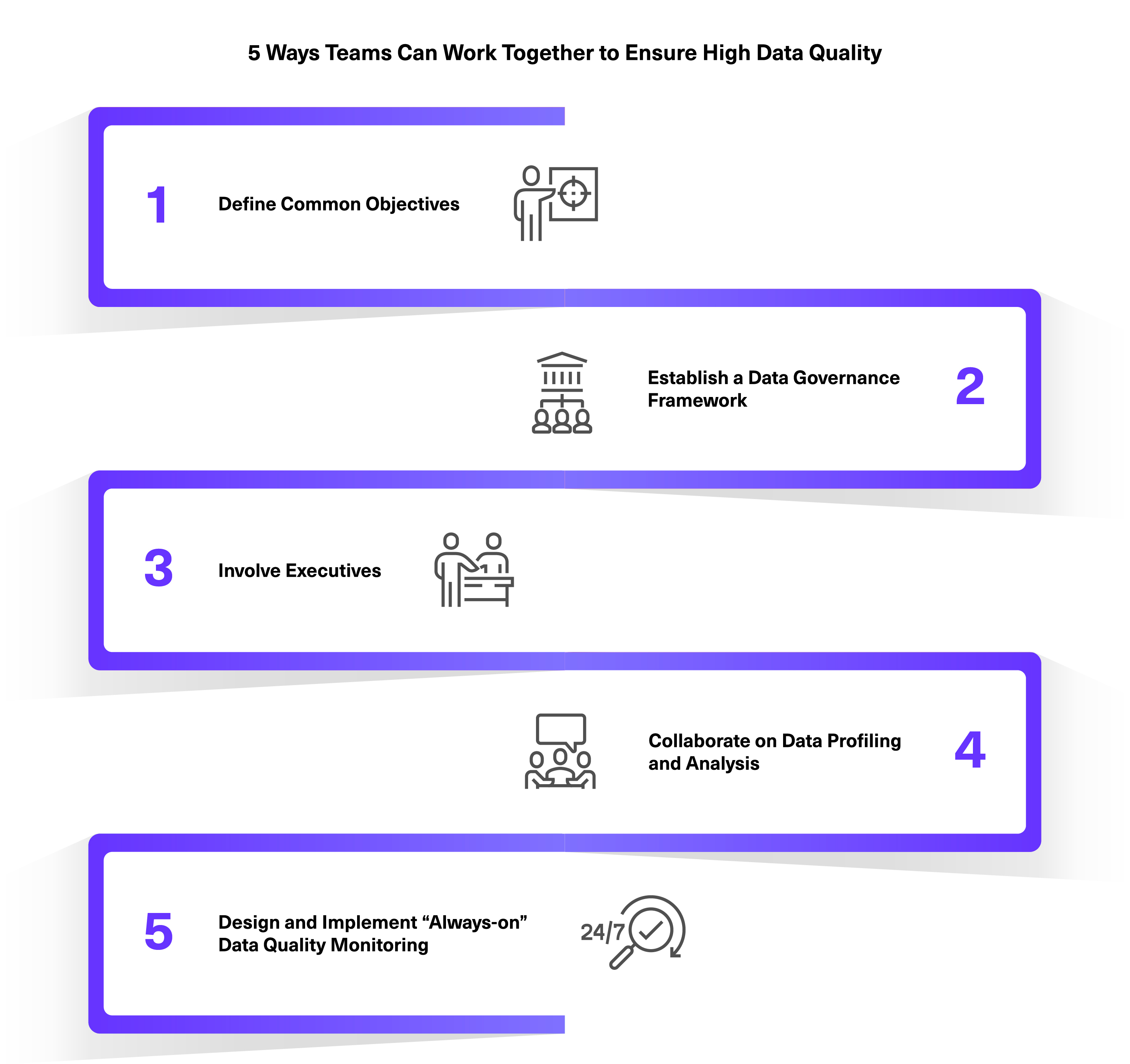

Here are the top five ways teams can work together to ensure high Data Quality and support broader user adoption.

1. Define Common Objectives

Executives, along with business and data analysts, should articulate clear objectives, including defining standards, metrics, and expectations for high-quality data that align with organizational goals.

2. Establish a Data Governance Framework

Work collaboratively to establish a robust data governance framework. Clearly define the responsibilities of each role, including the data stewards who oversee Data Quality initiatives.

3. Involve Executives

Executives should actively support and participate in Data Quality initiatives, including reviewing Data Quality metrics, providing resources, and reinforcing the importance of Data Quality in their company culture.

4. Collaborate on Data Profiling and Analysis

Data engineers and data analysts can collaborate on data profiling to identify patterns, anomalies, and potential issues. Business analysts can provide domain expertise to interpret the data and identify relevant business rules.
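A first profiling pass can be as simple as per-column statistics. The following sketch assumes rows are dictionaries and computes null rate, distinct count, and numeric range; the `profile` function and the sample data are invented to show the kinds of issues profiling can surface.

```python
def profile(rows):
    """Compute simple per-column statistics that help spot patterns and anomalies."""
    columns = sorted({col for row in rows for col in row})
    report = {}
    for col in columns:
        values = [row.get(col) for row in rows]
        present = [v for v in values if v is not None]
        stats = {
            "null_rate": round(1 - len(present) / len(values), 2),
            "distinct": len(set(present)),
        }
        # Booleans are ints in Python, so exclude them from the numeric range.
        numeric = [v for v in present if isinstance(v, (int, float)) and not isinstance(v, bool)]
        if numeric:
            stats["min"], stats["max"] = min(numeric), max(numeric)
        report[col] = stats
    return report

rows = [
    {"country": "US", "age": 34},
    {"country": "us", "age": 29},
    {"country": "US", "age": None},
    {"country": "DE", "age": 212},
]
for column, stats in profile(rows).items():
    print(column, stats)
# age {'null_rate': 0.25, 'distinct': 3, 'min': 29, 'max': 212}
# country {'null_rate': 0.0, 'distinct': 3}
```

Here the profile raises two questions for a business analyst to answer: is `us` the same country as `US`, and is an age of 212 a data entry error?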

5. Design and Implement “Always-on” Data Quality Monitoring

Design and implement Data Quality monitoring as part of the ETL process. Business and data analysts should collaborate on the business rules to enforce, and, wherever possible, data engineers, data analysts, and business analysts should use Data Quality and Data Governance tools to implement and enforce the controls and monitoring. Data Quality monitoring should be “always on”: continuously providing feedback and flagging anomalies before bad data can affect decisions.
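One way to keep monitoring “always on” is to evaluate checks inside the pipeline step itself, so every batch is checked on every run. The sketch below is a minimal illustration, not how any specific Data Quality tool works; `send_alert`, `run_with_monitoring`, `to_cents`, and the checks are all hypothetical examples.

```python
import logging

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("dq_monitor")

def send_alert(message):
    """Hypothetical stand-in for a real alerting channel (email, chat, incident tool)."""
    log.warning("DQ ALERT: %s", message)

def run_with_monitoring(batch, transform, checks, alert=send_alert):
    """Run one ETL step and evaluate every check on its output, on every run."""
    output = transform(batch)
    failures = [name for name, check in checks.items() if not check(output)]
    for name in failures:
        alert(f"check '{name}' failed on a batch of {len(output)} rows")
    # The caller decides what a failure means: quarantine the batch, block the load, or proceed.
    return output, failures

def to_cents(rows):
    """Example transform: convert dollar amounts to integer cents."""
    return [{**row, "amount": round(row["amount"] * 100)} for row in rows]

checks = {
    "not_empty": lambda rows: len(rows) > 0,
    "no_null_ids": lambda rows: all(row.get("id") is not None for row in rows),
    "amount_non_negative": lambda rows: all(row["amount"] >= 0 for row in rows),
}

batch = [{"id": 1, "amount": 9.99}, {"id": None, "amount": 5.00}, {"id": 3, "amount": -1.25}]
output, failures = run_with_monitoring(batch, to_cents, checks)
print(failures)  # prints ['no_null_ids', 'amount_non_negative']
```

Passing the alert function as a parameter keeps the monitor independent of any one notification system, and makes it easy to capture alerts in tests.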

Embracing a Future of Democratized Data Quality

Democratizing Data Quality isn’t just a technology initiative; it’s a cultural shift toward making data a shared asset within enterprise organizations. Aligning Data Quality with business requirements empowers organizations to maximize the value of their data-driven initiatives.

Providing broad access to high-quality data enables informed decision-making, fosters collaboration, and builds trust in the data. Enterprise organizations that prioritize the accessibility and reliability of their data are well positioned to gain a competitive edge in dynamic, fast-paced business environments.

Resources

Learn how Baker Hughes leverages Lightup to master Data Quality for global operations.

Discover how McDonald’s deploys thousands of Data Quality Checks, fast.

See firsthand why Fortune 500 companies are democratizing Data Quality in their organizations: try Lightup free for 30 days.

Questions? We’re here to help. Email us at info@lightup.ai or schedule a free strategy and demo session today.