AI Anomaly Detection

Lightup provides prebuilt AI Anomaly Detection (AD) models, specifically designed to detect Data Quality issues at scale. Developed using advanced statistical processing and machine learning (ML) techniques, Lightup’s AI Anomaly Detection has been field-tested and optimized based on real data from enterprise customers.

Powerful AI Anomaly Detection

Ensure Data Quality Expectations

Using prebuilt Anomaly Detection models, Lightup pinpoints data outliers, reveals hidden trends, and identifies business-specific seasonality, providing real-time insight whenever data fails to meet Data Quality expectations. Lightup’s Anomaly Detection includes a range of options:

- Automated Data Quality expectations

- Absolute or dynamic threshold setting

- Customizable settings to meet your desired outcomes
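To make the difference between absolute and dynamic thresholds concrete, here is a minimal Python sketch. It is not Lightup’s implementation. The function names, the fixed bounds, and the "mean ± k standard deviations" rule used for the dynamic case are illustrative assumptions.

```python
from statistics import mean, stdev


def breaks_absolute_threshold(value: float, lower: float, upper: float) -> bool:
    """Absolute threshold: the user fixes the acceptable range up front."""
    return not (lower <= value <= upper)


def breaks_dynamic_threshold(history: list[float], value: float, k: float = 3.0) -> bool:
    """Dynamic threshold (assumed rule): the acceptable range is learned
    from recent history as mean +/- k standard deviations."""
    mu = mean(history)
    sigma = stdev(history)
    return abs(value - mu) > k * sigma


# Hypothetical daily row counts for a table, followed by a suspicious drop.
recent_row_counts = [1000, 1020, 990, 1010, 1005]
todays_count = 700

print(breaks_absolute_threshold(todays_count, lower=500, upper=2000))  # False
print(breaks_dynamic_threshold(recent_row_counts, todays_count))      # True
```

With a fixed band of 500 to 2,000 rows, a drop from roughly 1,000 to 700 rows goes unnoticed, while a band learned from recent history flags it. Absolute thresholds remain useful for hard business limits that should never be crossed.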

3 Advanced Anomaly Detection Algorithms

Lightup’s AI Anomaly Detection, proven effective and accurate by our enterprise customers, is built on three advanced algorithms:

- Values Outside Expectations

- Sharp Change

- Slow Burn Trend Change

Lightup automatically selects the most suitable algorithms for each Data Quality Indicator (DQI) defined in the system, and lets you customize the Anomaly Detection configuration to meet your organization’s desired outcomes.

1. Values Outside Expectations

This algorithm detects incidents where a data point does not match the expectation predicted from historical patterns. Seasonality and trends observed in the DQI are taken into account when learning expectations from past data, yielding a robust monitor that accurately detects Data Quality incidents even when the signal has a complex shape.

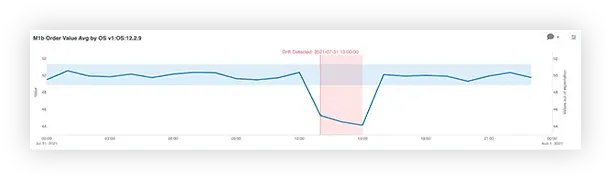
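The core idea (learn an expected value from past points at the same seasonal phase, then flag points that fall outside it) can be sketched in a few lines of Python. This is a simplified illustration, not Lightup’s model: the per-phase "mean ± k standard deviations" band, the `period`, `k`, and `min_cycles` parameters, and the function names are all assumptions.

```python
from statistics import mean, stdev


def expected_band(series: list[float], period: int, t: int, k: float) -> tuple[float, float]:
    """Expected range for point t, learned only from earlier points at the
    same seasonal phase (e.g. the same weekday when period=7)."""
    same_phase = series[t % period:t:period]
    mu, sigma = mean(same_phase), stdev(same_phase)
    return mu - k * sigma, mu + k * sigma


def values_outside_expectations(series: list[float], period: int,
                                k: float = 3.0, min_cycles: int = 3) -> list[int]:
    """Return indices whose value falls outside the seasonal expectation."""
    anomalies = []
    # Start only once every phase has min_cycles points of history.
    for t in range(period * min_cycles, len(series)):
        low, high = expected_band(series, period, t, k)
        if not (low <= series[t] <= high):
            anomalies.append(t)
    return anomalies


# Hypothetical weekly pattern: high on weekdays, low on weekends, with
# small week-to-week noise (+/-1) so the learned band has some width.
base_week = [100, 102, 98, 101, 99, 40, 42]
history = [v + (1 if week % 2 else -1) for week in range(4) for v in base_week]
this_week = list(base_week)
this_week[5] = 100  # a weekend day suddenly looks like a weekday

print(values_outside_expectations(history + this_week, period=7))  # [33]
```

A value of 100 would be perfectly normal on a weekday, so a single global threshold would miss it; comparing against the same weekday in past weeks is what exposes it.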

2. Sharp Change

This algorithm pinpoints incidents where a metric suddenly moves more than expected. The intuition behind it is that Data Quality issues typically present as sharp deviations from normal DQI behavior. Any seasonality in the signal is taken into account, while small level changes that are part of long-term trends are ignored.

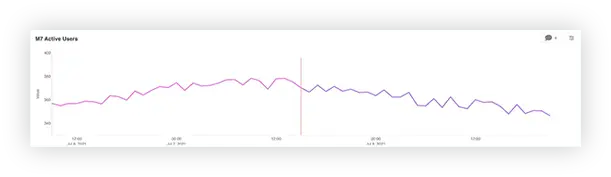
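One simple way to approximate this behavior is to compare each point with the same phase one cycle earlier (a seasonal difference, which cancels the repeating pattern) and flag differences that sit far from the typical one. Using the median and the median absolute deviation (MAD) means a steady trend only shifts the "typical" difference instead of triggering alerts. This is a generic robust-statistics sketch with assumed names and parameters, not Lightup’s Sharp Change algorithm.

```python
from statistics import median


def sharp_changes(series: list[float], period: int, k: float = 5.0) -> list[int]:
    """Flag indices whose change versus one cycle earlier is far from typical."""
    idx = range(period, len(series))
    diffs = [series[i] - series[i - period] for i in idx]     # seasonal differences
    center = median(diffs)                                    # typical change; absorbs a steady trend
    mad = median([abs(d - center) for d in diffs]) or 1e-9     # robust spread, never zero
    return [i for i, d in zip(idx, diffs) if abs(d - center) > k * mad]


# Hypothetical signal: 4-step cycle + slow upward trend + small deterministic noise.
pattern = [10, 20, 30, 20]
noise = [0.0, 0.3, -0.3, 0.2, -0.2]
signal = [pattern[i % 4] + 0.5 * i + noise[i % 5] for i in range(24)]
signal[14] -= 15  # a sudden drop at index 14

# Index 18 is flagged too, because it is compared with the dropped value one cycle earlier.
print(sharp_changes(signal, period=4))  # [14, 18]
```

Neither the cycle nor the gradual upward trend produces alerts here; only the abrupt drop does.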

3. Slow Burn Trend Change

This algorithm detects changes in long-term metric trends. It is especially useful for early detection of gradual trend changes, which are often caught too late because they develop slowly. For example, if the number of users on the platform used to grow 1% week over week and then starts to decline 1% week over week, Slow Burn Trend Change is the best algorithm to quickly spot the shift so you can take action.
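Using the example above, a minimal sketch compares the average week-over-week growth rate in a recent window with the rate in an earlier baseline window, and flags a shift larger than a chosen tolerance. The window lengths, the one-percentage-point tolerance, and the function name are hypothetical; this is not Lightup’s algorithm, and production trend-change detectors (for example, change-point methods) are more sophisticated.

```python
from statistics import mean


def slow_burn_trend_change(weekly: list[float], baseline_weeks: int = 8,
                           recent_weeks: int = 4, tolerance: float = 0.01):
    """Compare recent vs. baseline week-over-week growth.
    Returns (changed, baseline_rate, recent_rate), or None if history is too short."""
    growth = [weekly[i] / weekly[i - 1] - 1 for i in range(1, len(weekly))]
    if len(growth) < baseline_weeks + recent_weeks:
        return None
    baseline_rate = mean(growth[-(baseline_weeks + recent_weeks):-recent_weeks])
    recent_rate = mean(growth[-recent_weeks:])
    changed = abs(recent_rate - baseline_rate) >= tolerance
    return changed, baseline_rate, recent_rate


# Users grow 1% week over week for 10 weeks, then decline 1% for 4 weeks.
users = [10_000.0]
for week in range(14):
    users.append(users[-1] * (1.01 if week < 10 else 0.99))

changed, before, after = slow_burn_trend_change(users)
print(changed, round(before, 4), round(after, 4))  # True 0.01 -0.01
```

Each individual week only moves about 1%, which a point-wise check would likely accept; comparing growth rates across windows is what surfaces the reversal.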

Training Anomaly Detection Models

Unlike other Data Quality and Observability solutions that must run for weeks or months to train Anomaly Detection models, Lightup trains its models on historical data that reflects correct Data Quality expectations. To further optimize performance, Lightup includes backtesting and feedback loops for flexible fine-tuning as you go.

For complex data elements, simply specify as many unique historical date ranges as needed to improve seasonal awareness and the accuracy of trend analysis.
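As an illustration of how several hand-picked date ranges can feed one seasonal model, the sketch below pools observations from the chosen ranges, skipping a known bad week, and learns an average value per weekday. The data layout (a dict from date to value), the weekday-average model, and the function name are assumptions for illustration only.

```python
from datetime import date, timedelta
from statistics import mean


def learn_weekday_profile(observations: dict[date, float],
                          training_ranges: list[tuple[date, date]]) -> dict[int, float]:
    """Average value per weekday (0=Monday) over the chosen inclusive date ranges."""
    by_weekday: dict[int, list[float]] = {wd: [] for wd in range(7)}
    for start, end in training_ranges:
        day = start
        while day <= end:
            if day in observations:
                by_weekday[day.weekday()].append(observations[day])
            day += timedelta(days=1)
    return {wd: mean(vals) for wd, vals in by_weekday.items() if vals}


# Hypothetical history: 100 on weekdays, 40 on weekends,
# with an outage week (Jan 15-21, 2024) that recorded zeros.
start = date(2024, 1, 1)  # a Monday
observations = {}
for offset in range(42):
    day = start + timedelta(days=offset)
    normal = 100.0 if day.weekday() < 5 else 40.0
    outage = date(2024, 1, 15) <= day <= date(2024, 1, 21)
    observations[day] = 0.0 if outage else normal

# Train only on the healthy ranges, leaving out the outage week.
profile = learn_weekday_profile(observations, [
    (date(2024, 1, 1), date(2024, 1, 14)),
    (date(2024, 1, 22), date(2024, 2, 11)),
])
print(profile[0], profile[5])  # 100.0 40.0
```

Choosing ranges that exclude known incidents keeps the learned expectations anchored to correct data, which is the point of training on historical data rather than waiting for new data to accumulate.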

Enhancing AI Anomaly Detection

The best part? Lightup is designed to make fine-tuning and supervising Anomaly Detection models simple, even for beginners.

Rule Preview

Lightup provides an intuitive backtesting and preview workflow that lets users quickly configure detection criteria that work for their requirements, without requiring expertise in the statistical models being used.

Each Data Quality rule can be backtested on historical data using the preview workflow, allowing users to assess rule performance before adding the rule to the live production environment.*

After previewing the results, users can fine-tune the rule with simple settings such as rule aggressiveness: a more aggressive rule catches more incidents, and a less aggressive rule catches fewer.

Backtesting allows users to ensure the Anomaly Detection rule will accurately detect the type of Data Quality incidents they need to catch.

*Longer training periods may be needed for more complex seasonality, such as yearly seasonal signals. Most DQIs show non-seasonal, daily, or weekly seasonal behavior.
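The backtest-then-tune loop can be pictured as replaying a rule over historical data at different aggressiveness levels and comparing the incidents it would have raised. In this sketch, aggressiveness is a hypothetical 0-to-1 knob mapped to the width of a rolling "mean ± k standard deviations" band (5σ at 0, 2σ at 1); both the mapping and the detector are assumptions, not Lightup’s.

```python
from statistics import mean, stdev


def backtest(series: list[float], aggressiveness: float, window: int = 7) -> list[int]:
    """Replay a rolling-window detector over historical data and return
    the indices it would have flagged (hypothetical mapping and detector)."""
    k = 5.0 - 3.0 * aggressiveness  # 5 sigma (least aggressive) .. 2 sigma (most)
    incidents = []
    for t in range(window, len(series)):
        past = series[t - window:t]
        mu, sigma = mean(past), stdev(past)
        if abs(series[t] - mu) > k * max(sigma, 1e-9):
            incidents.append(t)
    return incidents


# Hypothetical historical metric with a spike at index 11 and a dip at index 14.
history = [100, 103, 98, 101, 99, 102, 97, 100, 104, 96,
           100, 130, 101, 99, 60, 100]
for level in (0.0, 1.0):
    print(level, backtest(history, aggressiveness=level))
# 0.0 [11]
# 1.0 [11, 14]
```

Seeing that the least aggressive setting misses the dip at index 14 while the most aggressive one catches it is exactly the kind of comparison a preview lets users make before a rule goes live.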

Online Feedback

Users can give the Lightup system online feedback by rejecting Data Quality incidents that the system raises.

This online feedback is used to:

- Add supervised training to the Anomaly Detection model for improved model accuracy.

- Ensure ongoing accuracy of Anomaly Detection rules, with low maintenance for end users.
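A toy version of this feedback loop: each rejected incident is stored as a labeled example and slightly widens the rule’s band, so similar borderline deviations stop firing, while a cap keeps feedback from silencing the rule entirely. The class, its parameters, and the multiplicative widening rule are hypothetical; real supervised retraining would make fuller use of the stored labels.

```python
from dataclasses import dataclass, field


@dataclass
class FeedbackTunedRule:
    """Hypothetical rule whose band widens a little each time a user rejects an incident."""
    k: float = 3.0      # band width, in standard deviations
    step: float = 1.1   # multiplicative widening per rejection (assumed)
    max_k: float = 6.0  # cap so feedback cannot silence the rule entirely
    rejected: list[float] = field(default_factory=list)  # labeled false positives

    def is_incident(self, z_score: float) -> bool:
        return abs(z_score) > self.k

    def reject(self, z_score: float) -> None:
        """Record a user rejection (false positive) and relax sensitivity."""
        self.rejected.append(z_score)
        self.k = min(self.k * self.step, self.max_k)


rule = FeedbackTunedRule()
print(rule.is_incident(3.2))  # True: 3.2 sigma exceeds the 3.0 sigma band
rule.reject(3.2)              # the user marks it as not a real incident
print(rule.is_incident(3.2))  # False: the band has widened to about 3.3 sigma
```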

Find hidden bad data before stakeholders report it.

Deliver reliable data across enterprise analytics and AI workloads with Lightup Data Quality and Data Observability solutions.